Since last week, our self hosted Matomo creates a huge server load, up to crashing the database server.

Today we moved matoma and the (3,5 GB) database to another (better - 48 cores, 128GB RAM) webserver but the same issue here. The load avarage increases from 0.15 to 4.48 to 5.4.

The support told us, it’s caused is the following MySQL process:

-------------------------------------------------------------------------------------------------------------

| Id | User | Host | db | Command | Time | State | Info

| 12953 | ABC | MyHost.com:39350 | database | Execute | 48818 | Sending data |

SELECT /*+ MAX_EXECUTION_TIME(7200000) */

yyy.idvisit AS idvisit,

7 AS idgoal,

4 AS `type`,

yyy.idaction AS idaction,

COUNT(*) AS `1`,

ROUND(SUM(yyy.revenue_total),2) AS `2`,

COUNT(yyy.idvisit) AS `3`,

ROUND(SUM(yyy.revenue_subtotal),2) AS `4`,

ROUND(SUM(yyy.revenue_tax),2) AS `5`,

ROUND(SUM(yyy.revenue_shipping),2) AS `6`,

ROUND(SUM(yyy.revenue_discount),2) AS `7`,

SUM(yyy.items) AS `8`,

yyy.pages_before AS `9`,

SUM(yyy.attribution) AS `10`,

COUNT(*) AS `12`,

ROUND(SUM(yyy.revenue),2) AS `15`

FROM (

SELECT

num_total AS pages_before,

1 / num_total AS attribution,

r.idvisit AS idvisit,

lac.idaction AS idaction,

lvcon.revenue AS revenue_total,

1 / num_total * lvcon.revenue AS revenue,

1 / num_total * lvcon.revenue_subtotal AS revenue_subtotal,

1 / num_total * lvcon.revenue_tax AS revenue_tax,

1 / num_total * lvcon.revenue_shipping AS revenue_shipping,

1 / num_total * lvcon.revenue_discount AS revenue_discount,

1 / num_total * lvcon.items AS items

FROM (

SELECT /* sites 1 */ /* 2023-08-03,2023-08-04 */ /* Actions */ /* trigger = CronArchive */

log_conversion.idvisit, COUNT(*) AS num_total

FROM

piwik_log_conversion AS log_conversion RIGHT JOIN piwik_log_link_visit_action AS log_vpast ON log_conversion.idvisit = log_vpast.idvisit LEFT JOIN piwik_log_action AS lac_past ON log_vpast.idaction_name = lac_past.idaction

WHERE

log_conversion.server_time >= '2023-08-03 22:00:00'

AND log_conversion.server_time <= '2023-08-04 21:59:59'

AND log_conversion.idsite IN ('1')AND log_conversion.idgoal = 7

AND log_vpast.server_time <= log_conversion.server_time

AND lac_past.type = 4

GROUP BY

log_conversion.idvisit

) AS r

LEFT JOIN piwik_log_conversion lvcon ON lvcon.idgoal = 7 AND lvcon.idvisit = r.idvisit

RIGHT JOIN piwik_log_link_visit_action logv ON logv.idvisit = r.idvisit

LEFT JOIN piwik_log_action lac ON logv.idaction_name = lac.idaction

WHERE logv.server_time >= '2023-08-03 22:00:00'

AND logv.server_time <= '2023-08-04 21:59:59'

AND logv.idsite IN (1)

AND lac.type = 4

AND logv.server_time <= lvcon.server_time

) AS yyy

GROUP BY yyy.idaction

ORDER BY `9` DESC

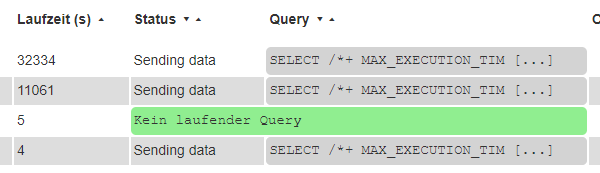

which is running 5 times at the moment.

Are there any known issues or topics?

Thanks and best regards