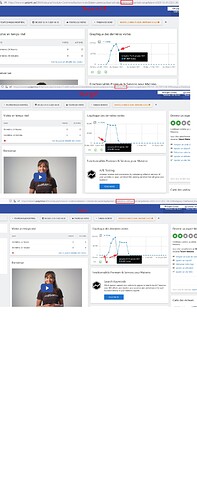

In this example we have two site ids,

In site id 25 we only have data from 1 march 2016 up to 14 September 2022 and in site id 2, we have data from 15 September 2022 until today.

The idea is to merge all the data in site id 2.

You must run this sql query on the database of your matomo installation, but remember to change the date range and ids of sites you want to merge first.

I would like to ask the Matomo team to implement this option.

delimiter //

CREATE PROCEDURE merge_sites(

IN id_old INT,

IN id_new INT,

IN month_start VARCHAR(7),

IN month_end VARCHAR(7),

IN day_end INT

)

BEGIN

DECLARE done BOOL DEFAULT FALSE;

DECLARE tablename CHAR(255);

DECLARE cur_tablename CURSOR FOR

SELECT TABLE_NAME

FROM INFORMATION_SCHEMA.TABLES

WHERE (

TABLE_NAME >= CONCAT("matomo_archive_numeric_", REPLACE(month_start, '-', '_'))

AND TABLE_NAME <= CONCAT("matomo_archive_numeric_", REPLACE(month_end, '-', '_'))

) OR (

TABLE_NAME >= CONCAT("matomo_archive_blob_", REPLACE(month_start, '-', '_'))

AND TABLE_NAME <= CONCAT("matomo_archive_blob_", REPLACE(month_end, '-', '_'))

)

;

DECLARE CONTINUE HANDLER FOR NOT FOUND SET done = TRUE;

DECLARE CONTINUE HANDLER FOR SQLEXCEPTION BEGIN END;

OPEN cur_tablename;

myloop: WHILE NOT done DO

FETCH cur_tablename INTO tablename;

IF NOT done THEN

SET @sql = CONCAT(

'UPDATE ', tablename,

' SET idsite = ', id_new,

' WHERE idsite = ', id_old,

' AND date1 <= \'', DATE_FORMAT(

STR_TO_DATE(

CONCAT(YEAR(DATE_ADD(NOW(), INTERVAL -1 MONTH)), '-', MONTH(DATE_ADD(NOW(), INTERVAL -1 MONTH)), '-', day_end),

'%Y-%m-%d'

),

'\''

),

';'

);

PREPARE stmt FROM @sql;

EXECUTE stmt;

DEALLOCATE PREPARE stmt;

END IF;

END WHILE;

CLOSE cur_tablename;

UPDATE matomo_site

SET ts_created = TIMESTAMP(CONCAT(month_start, '-01 00:00:00.000'))

WHERE idsite = id_new;

UPDATE matomo_log_link_visit_action

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_log_visit

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_log_conversion

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_report

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_report_numeric

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_report_blob

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_sequence

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_session

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_site_setting

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_site_url

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_tracking_goal

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_user

SET idsite = id_new

WHERE idsite = id_old;

UPDATE matomo_user_dashboard

SET idsite = id_new

WHERE idsite = id_old;

END;

//

DELIMITER ;

CALL merge_sites(

25, -- source site id

2, -- target site id

'2016-03', -- start month

'2022-09', -- end month

14 -- day_end

);